Empowering Autonomous Machines + Robotics with Perception

A look at perception and the critical role this layer plays in the autonomy stack

In Part Two of this series, Dr Lee explains the components of a full autonomy stack and the critical role the perception layer plays within it.

Part 2

"A system is fully autonomous if it is capable of achieving its goal within a defined scope without human interventions while adapting to operational and environmental conditions."

— US National Institute of Standards and Technology (NIST)

The End-to-End Approach to Autonomy

In robotics and autonomous systems, the autonomy stack is a fundamental concept. The stack is typically divided into three main layers:

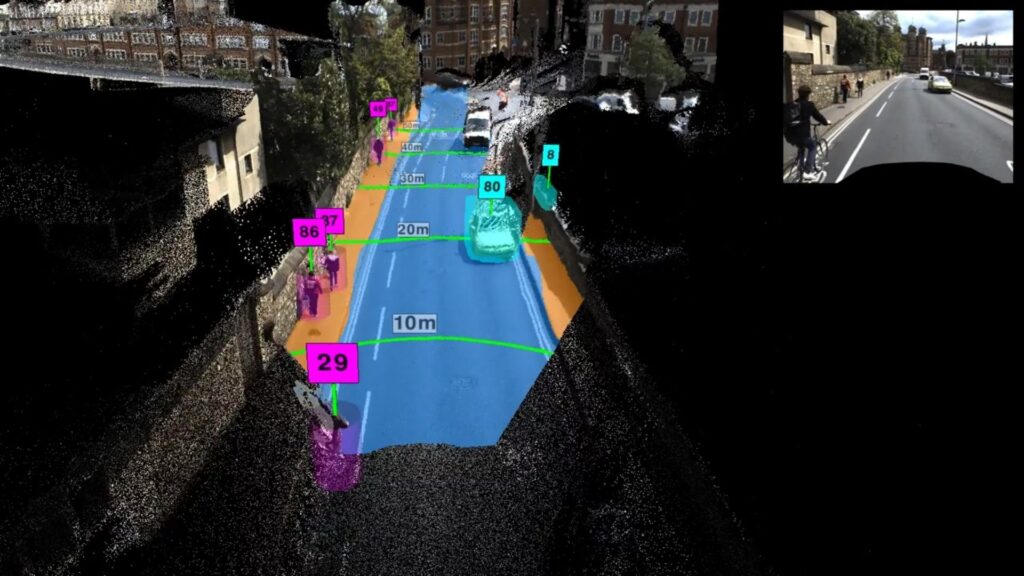

1. Sensing and Perception

2. Planning and Decision

3. Control and Actuation

1. Sensing and Perception

The first layer, Sensing and Perception, is crucial as it enables the machine to be aware of its surroundings. This awareness involves gathering sufficient information to understand the environment, including the machine itself and the objects it may interact with. Sensing involves capturing raw data, such as images from cameras. Perception, on the other hand, encompasses the steps needed to interpret this environment, including extracting spatial and semantic information from the raw inputs.

2. Planning and Decision

The second layer, Planning and Decision, is task-specific: it depends on the goal the machine has been designed to achieve. For example, an autonomous truck might need to follow a leader, position itself relative to a target location, or collect and transfer payload material. The decision-making process is also tailored to the operating environment; an autonomous vehicle on a racetrack requires a different decision policy from one on a public road, a mine site, or a bush track.

3. Control and Actuation

The third layer, Control and Actuation, translates decisions into mechanical and physical actions. These actions could include accelerating, decelerating, steering, or adjusting the position and orientation of each joint of a robotic arm or a manoeuvrable conveyor belt. Because every action changes what the sensors observe next, the three layers together form a feedback loop in which the machine continuously senses, perceives, plans, decides, controls, and actuates.

These layers collectively form the end-to-end approach to autonomy, providing a structured framework for developing autonomous systems.
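The loop formed by the three layers can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular product: the names (`Percept`, `Command`, `sense`, `perceive`, `plan_and_decide`, `control`), the range-reading sensor, the 10 m safe gap, and the toy world model are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    """Interpreted view of the scene (output of Sensing and Perception)."""
    obstacle_distance_m: float


@dataclass
class Command:
    """Actuator request (output of Control and Actuation)."""
    throttle: float  # 0.0 to 1.0
    brake: float     # 0.0 to 1.0


def sense(world):
    # Sensing: capture raw data (hypothetical range readings in metres).
    return world["range_readings_m"]


def perceive(raw):
    # Perception: extract spatial information from the raw readings.
    return Percept(obstacle_distance_m=min(raw))


def plan_and_decide(percept, safe_gap_m=10.0):
    # Planning and Decision: a task-specific policy (assumed safe-gap rule).
    return "stop" if percept.obstacle_distance_m < safe_gap_m else "cruise"


def control(decision):
    # Control and Actuation: translate the decision into actuator commands.
    if decision == "stop":
        return Command(throttle=0.0, brake=1.0)
    return Command(throttle=0.3, brake=0.0)


def run(world, steps, speed_mps=5.0, dt_s=1.0):
    """Run the feedback loop: each command changes what is sensed next."""
    history = []
    for _ in range(steps):
        cmd = control(plan_and_decide(perceive(sense(world))))
        history.append(cmd)
        if cmd.brake == 0.0:
            # Toy world model: cruising closes the gap to every obstacle.
            world["range_readings_m"] = [
                r - speed_mps * dt_s for r in world["range_readings_m"]
            ]
    return history


world = {"range_readings_m": [22.0, 30.0]}
history = run(world, steps=4)
```

In this toy run the machine cruises while the nearest obstacle is at least 10 m away and brakes once the gap closes below that threshold. Each function stands in for a whole layer; a real system would replace each with far richer logic.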

Integrating AI into Autonomous Systems

In recent years, there have been significant advancements in machine learning-based AI. The challenge now is to determine the best way to integrate machine learning into robotics and autonomous systems. Within the end-to-end approach to autonomy, there are two primary methodologies for applying machine learning: Monolithic AI and Modular AI.

Monolithic AI for End-to-End Autonomy

At one extreme, the monolithic AI approach uses a single deep neural network (DNN) to convert raw sensing inputs, such as camera images, directly into control and actuation signals. In this scenario, the Perception, Planning, Decision, and Control layers are entirely encapsulated within the machine learning model, so the system's behaviour depends heavily on the data used for training. When this approach works, it can be highly effective and can produce surprising results. Its downside is that failures are hard to explain, because the model exposes no intermediate outputs to inspect.
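To make the shape of the monolithic approach concrete, here is a toy sketch in Python. It is not a real DNN: the two small weight matrices are arbitrary placeholders rather than trained values, and the four-pixel "image" and the function name `monolithic_policy` are assumptions for illustration only.

```python
import math

# Arbitrary placeholder weights; a real model would learn these from data.
W1 = [[0.5, -0.5, 0.5, -0.5],
      [0.25, 0.25, 0.25, 0.25]]
W2 = [[1.0, 0.0],
      [0.0, 1.0]]


def monolithic_policy(pixels):
    """Map raw pixel intensities straight to (steering, throttle)."""
    # Hidden layer: weighted sums of the pixels passed through tanh.
    hidden = [math.tanh(sum(w * x for w, x in zip(row, pixels))) for row in W1]
    # Output layer: weighted sums of the hidden activations.
    steering, throttle = (
        sum(w * h for w, h in zip(row, hidden)) for row in W2
    )
    return steering, throttle


steering, throttle = monolithic_policy([1.0, 1.0, 1.0, 1.0])
```

The only interface is pixels in, control signals out. There is no named percept or decision between the two, which is what makes a wrong output difficult to trace back to a cause.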

Modular AI for End-to-End Autonomy

At the other extreme, the modular AI approach implements each layer of the autonomy stack separately. This allows parts of the stack governed by well-defined rules (such as physics, geometry, and traffic laws) to be handled by deterministic, logic-based AI, while still using data-driven AI from trained DNNs where appropriate. Because each module has its own inputs and outputs, this approach can provide more transparency and explainability than the monolithic approach.
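A minimal Python sketch of this mix of rule-based and data-driven modules follows. Everything in it is an assumption for illustration: `stub_detector` stands in for a trained DNN and simply returns a fixed distance, the 4 m/s² deceleration is an arbitrary figure, and the `trace` dictionary is one possible way to expose intermediate results.

```python
def stopping_distance_m(speed_mps, decel_mps2):
    # Deterministic physics: d = v^2 / (2a) under constant deceleration.
    return speed_mps ** 2 / (2.0 * decel_mps2)


def stub_detector(image):
    # Stand-in for a trained DNN; returns a hypothetical obstacle
    # distance in metres regardless of the input image.
    return 18.0


def modular_step(image, speed_mps, detector=stub_detector, decel_mps2=4.0):
    """Run one decision and record every intermediate result."""
    trace = {}
    # Data-driven module: estimate the distance to the nearest obstacle.
    trace["obstacle_distance_m"] = detector(image)
    # Rule-based module: distance needed to stop at the current speed.
    trace["stopping_distance_m"] = stopping_distance_m(speed_mps, decel_mps2)
    # Decision: brake if the machine could not otherwise stop in time.
    if trace["stopping_distance_m"] >= trace["obstacle_distance_m"]:
        trace["decision"] = "brake"
    else:
        trace["decision"] = "continue"
    return trace


slow = modular_step(image=None, speed_mps=10.0)
fast = modular_step(image=None, speed_mps=14.0)
```

If the decision turns out to be wrong, the trace shows whether the fault lies in the detector's estimate or in the rule applied to it, which is the kind of transparency the modular approach aims for.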

The Autonomous Ecosystem

The autonomous systems industry comprises companies that either focus on developing the complete autonomy stack or specialise in individual parts of it. There is no definitive winner between these approaches, and the industry seems to have ample space for both strategies. Some companies may excel by offering comprehensive solutions, while others may find their niche by perfecting specific layers of the autonomy stack.

In conclusion, the end-to-end approach to autonomy provides a structured framework for developing autonomous systems. AI can be integrated through a monolithic approach, which can be highly effective but is hard to explain when it fails, or through a modular approach, which offers more transparency. As the industry continues to evolve, both holistic and specialised approaches will likely coexist, driving further advancements in autonomous technology.

Dr Samson Lee

Chief Technology Evangelist | Chief Technical Officer

Dr Samson Lee is an expert in leading computer vision, machine learning, imaging and streaming media projects in Sydney and New York. Dr Lee is one of the founders of Visionary Machines, where he works on Visionary Machines’ Perception systems.
